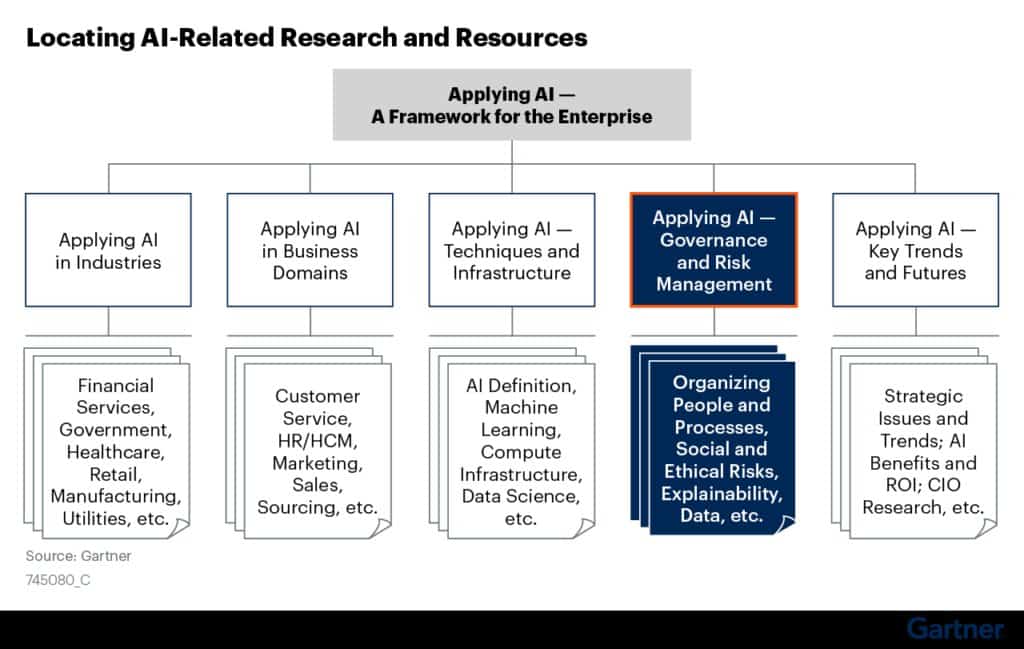

Artificial Intelligence is a broad and murky subject to many observers. Gartner analyst Bern Elliot and several colleagues just published “Applying AI – A Framework for the Enterprise” to try to bring clarity to the various dimensions of this topic. Figure 1 shows the five main areas of Gartner coverage, one of which is AI Governance and Risk Management, likely the murkiest of the lot.

Figure 1: Applying AI – A Framework for the Enterprise

“AI trust, risk, and security management pose new operational requirements that are not well understood. Conventional controls and organizational processes do not sufficiently ensure AI’s trustworthiness, reliability, and security.”

Three primary reasons why AI risks are not adequately addressed in most organizations

Organizational fragmentation — Ownership of this domain is spread across functions such as legal, compliance, AI development, enterprise architecture, privacy, and security.

Production-first mentality — Most enterprises struggle to get their AI models into production; building trust and risk management into AI life cycles is an afterthought.

Enterprises are not convinced it’s needed — Typically, there is no readily available proof of benign mistakes or adversarial attacks causing suboptimal or ineffective AI model performance. Most enterprises are not actively managing and monitoring AI models after deployments, so they don’t even know if these issues exist.

See Applying AI — Governance and Risk Management

Industry report confirmation

In fact, our conclusions are borne out by a recent report from FICO and Corinium – “The State of Responsible AI” – that finds most companies deploy AI at significant risk. Amongst their findings:

- 65% of respondents’ companies can’t explain how specific AI model decisions or predictions are made

- 73% have struggled to get executive support for prioritizing AI ethics and Responsible AI practices

- Only one-fifth (20%) actively monitor their models in production for fairness and ethics

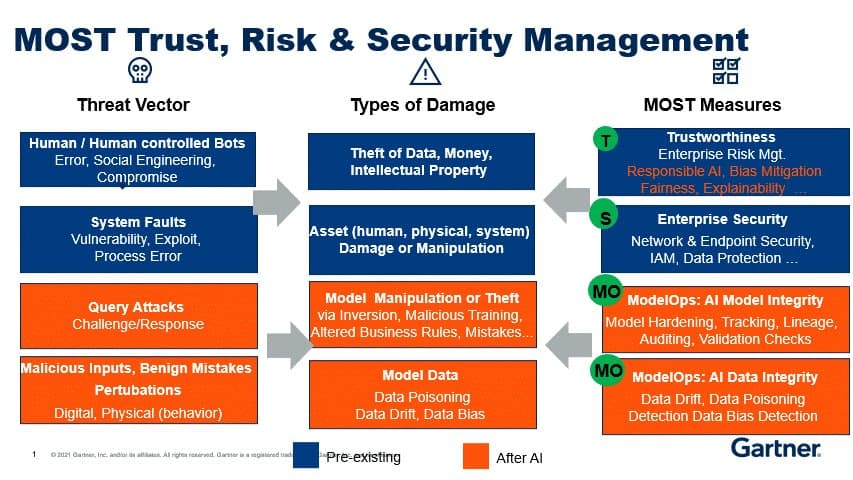

Market Guide for AI TRiSM

We are about to publish our first Market Guide for AI Trust, Risk and Security Management (AI TRiSM). The Market Guide covers solutions that help ensure reliable, effective model performance and that mitigate the substantial business risks that arise when adequate management is lacking. These solutions implement the measures in our MOST framework, as depicted below:

Figure 2: MOST Framework

However, before implementing solutions, organizations must first assign clear responsibility for the requisite AI TRiSM activities. This is difficult because ownership of the domain is spread across multiple functions.

But that doesn’t make it any less important or urgent. If anything, the converse is true.

“Diffuse management responsibilities generally means that a lot of issues are already falling through the organizational cracks – i.e., no one is paying attention to them.”

It doesn’t have to be this way, nor should it. Our future depends on the reliability and trustworthiness of AI. Hopefully, we will all start to take it more seriously.

First published on Gartner Blog Network