Just two years ago, boardrooms across Southeast Asia and Hong Kong buzzed with anxiety over AI's disruptive potential. Would competitors leap ahead? Would opaque algorithms trigger regulatory backlash?

Today, in late 2025, that fear has crystallised into a strategy.

As Russell Fishman, senior director of product management at NetApp, observes: "The regulatory environment is evolving rapidly… Clear rules give organisations confidence by defining what 'right' looks like, reducing uncertainty, and accelerating adoption."

In a region where regulatory landscapes remain fragmented—Singapore's Model AI Governance Framework, Thailand's draft AI ethics guidelines, and the EU AI Act's extraterritorial reach—forward-looking CIOs are no longer waiting for harmonisation.

Instead, they are building systems that anticipate compliance, turning governance into a differentiator. This shift has given rise to what industry insiders now term "The Governed Advantage": the ability to scale AI responsibly while earning the trust of customers, regulators, and investors alike.

The real bottleneck to scaling AI

Despite the hype around GPUs and large language models, CIOs on the ground report a sobering reality: the primary constraint isn't compute—it's data. Fishman underscores this: "GPUs can be viewed as the engine, but data is the fuel—and raw data is not sufficient. It must be cleaned, refined, structured and delivered appropriately to support AI systems."

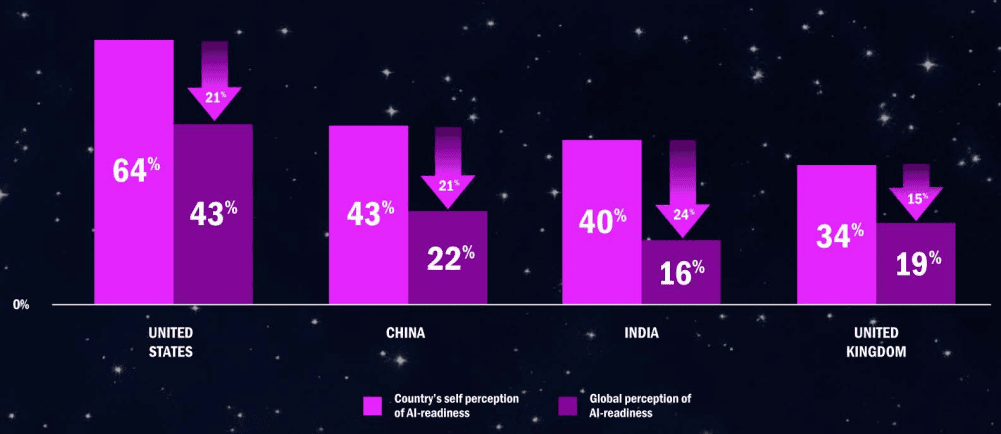

NetApp's 2025 AI Space Race survey reveals a stark truth: while 81% of organisations are piloting or scaling AI, only 15% report tangible success (editor's note: the survey covered China, India, the UK and the US). The gap, Fishman explains, stems from a fundamental misconception.

"Organisations across the region and globally possess large volumes of data, but only those investing in making it AI-ready (governed, high quality and accessible) are realising outcomes," Fishman says.

This challenge is especially acute in Asia, where legacy systems, multilingual unstructured data (from customer service logs to regional compliance documents), and siloed operations compound data fragmentation.

As generative and agentic AI increasingly rely on emails, design files, video, and code repositories—formats historically ignored by traditional data warehouses—CIOs are now racing to retrofit governance onto data ecosystems never designed for AI.

Regulation as a strategic enabler

Contrary to early perceptions, regulation is emerging not as a brake but as an accelerator. Fishman argues that "regulation levels the playing field by creating an opportunity to accelerate AI with a clearer path to ROI."

With frameworks like Singapore's AI Verify toolkit and the ASEAN Guide on AI Governance and Ethics, published in 2024, CIOs can now justify AI investments with stronger risk-mitigation narratives.

Moreover, regulation is driving vendor innovation. "Because regulation hasn't properly existed until now, vendors have tried to cover every possible scenario," Fishman notes.

"As frameworks mature, you'll see providers like NetApp focus on specific capabilities that help organisations stay compliant as they build data pipelines and manage sensitive information like PII," he adds.

This alignment between policy and product enables CIOs to embed compliance by design—rather than bolt it on post-deployment. For instance, intelligent data infrastructure can now auto-classify personally identifiable information (PII), enforce retention policies, and maintain audit trails across hybrid environments, satisfying both GDPR-style requirements and local mandates like Indonesia's PDP Law.
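To make the idea of compliance by design more concrete, here is a minimal Python sketch of the three functions mentioned above: classifying records that contain PII, applying a retention period per classification, and writing an audit entry for every decision. It is an illustration only, not NetApp's implementation. The patterns, the retention periods and names such as `Record` and `sweep` are all assumptions made for this example, and a real classifier would cover far more identifier types and jurisdiction-specific rules.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical patterns for illustration; production classifiers are far broader.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

# Hypothetical retention periods in days, keyed by classification label.
RETENTION_DAYS = {"pii": 365, "general": 1825}


@dataclass
class Record:
    text: str
    created: date


def classify(record: Record) -> str:
    """Label a record 'pii' if any pattern matches, otherwise 'general'."""
    if any(p.search(record.text) for p in PII_PATTERNS.values()):
        return "pii"
    return "general"


def sweep(records: list[Record], today: date) -> tuple[list[Record], list[dict]]:
    """Apply retention rules and return (records kept, audit trail)."""
    kept, audit = [], []
    for record in records:
        label = classify(record)
        limit = timedelta(days=RETENTION_DAYS[label])
        action = "delete" if today - record.created > limit else "keep"
        # Every decision is logged, whether or not the record is kept.
        audit.append({
            "label": label,
            "action": action,
            "created": record.created.isoformat(),
        })
        if action == "keep":
            kept.append(record)
    return kept, audit
```

In this sketch the audit trail is produced by the same function that enforces the policy, so there is no code path that deletes or keeps a record without leaving a trace.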

Building adaptive, living AI strategies

By 2026, static AI roadmaps will be obsolete. The pace of technological change—exemplified by the rapid evolution from generative to agentic AI—demands strategies that are fluid, resilient, and continuously updated. Fishman advises CIOs to treat their AI strategy as a "living plan," anchored by three pillars:

- Embedded compliance from day one, tailored to jurisdiction-specific data and privacy rules.

- End-to-end visibility across the AI lifecycle, "from data ingestion to training, inference and archiving", enabled by robust metadata management and data lineage.

- Cyber resiliency, where intelligent infrastructure automatically protects sensitive AI-generated datasets against threats like ransomware or data poisoning.

This approach allows organisations to pivot quickly as new regulations emerge or model architectures evolve, without sacrificing security or governance.

Enabling innovation safely with sandboxes

One of the most delicate balancing acts for CIOs is fostering innovation while maintaining control. Data scientists and developers, Fishman notes, "aren't bad actors. They're just focused on building and experimenting, not on compliance or security." Yet what works in a lab often fails in production once CISOs intervene.

The solution? A governed "sandbox" environment. "CIOs need to provide a safe space where innovation can happen without risking security, data misuse or regulatory breaches," Fishman explains. Intelligent data infrastructure can enforce policies in the background—masking PII, logging access, and preventing exfiltration—so that innovation proceeds at speed, without creating vulnerabilities.
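As a rough illustration of policies being enforced in the background, the Python sketch below wraps a dataset so that every read is logged and email addresses are masked before the data reaches the experimenter. It is a simplified sketch under stated assumptions, not a description of any vendor's product: the `GovernedSandbox` class, the single email pattern and the `[REDACTED_EMAIL]` placeholder are all hypothetical.

```python
import re
from datetime import datetime, timezone

# Hypothetical pattern; a real sandbox would mask many more identifier types.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class GovernedSandbox:
    """Hypothetical wrapper that masks PII and logs every read."""

    def __init__(self, rows: list[str]):
        self._rows = rows          # raw data is never handed out directly
        self.access_log: list[dict] = []

    def read(self, user: str, purpose: str) -> list[str]:
        # Record who accessed the data, why, and when, before returning it.
        self.access_log.append({
            "user": user,
            "purpose": purpose,
            "rows": len(self._rows),
            "at": datetime.now(timezone.utc).isoformat(),
        })
        # Return masked copies so experiments never see raw email addresses.
        return [EMAIL.sub("[REDACTED_EMAIL]", row) for row in self._rows]
```

A data scientist calling `read("analyst1", "fraud-model-experiment")` gets usable text with the addresses redacted, while the access log accumulates the evidence a CISO or auditor would later ask for.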

Companies in financial services across Singapore and Hong Kong are already deploying such environments for use cases like real-time fraud detection and dynamic credit scoring, where model accuracy must be matched by explainability and auditability.

Governance as the foundation of AI value

As 2026 approaches, the message from Asia's CIOs is clear: trust is the new currency of AI. Efficiency alone no longer suffices. Investors now scrutinise AI ethics frameworks as rigorously as they do financial statements, and consumers increasingly favour brands that demonstrate responsible AI use.

For Fishman, the path forward is unequivocal: "AI is getting more sophisticated, and the risks are increasing… The priority is to invest in flexible, future-ready data environments with governance built-in."

In a region defined by diversity and dynamism, the winners will be those who operationalise trusted AI—not as a compliance checkbox, but as a core capability woven into every layer of their data and decision-making infrastructure. In doing so, they won't just meet regulatory requirements; they'll earn the lasting trust of the markets they serve.

Click on the PodChats player to hear Fishman share his views on how to operationalise trusted AI in 2026.

- What are the most important characteristics of trusted AI from a CIO's point of view?

- What are the top 3 drivers pushing enterprises in the region to accelerate trusted AI adoption?

- What are businesses in the region doing right (and wrong) in their current approach to responsible AI?

- Decades into what we can refer to as modern computing, why do we continue to have a data problem?

- The Chinese philosophy is that for every risk there is an opportunity. Why should CIOs see the evolving regulatory landscape in SEA+HK as an opportunity?

- Given the evolving nature of technology and regulation, how should a CIO approach the company's AI strategy to ensure it is adaptive, scalable, and sustainable?

- How should CIOs architect their AI strategy to support innovation while staying compliant?

- What role does technology infrastructure, particularly data management, play in enabling this new era of governed AI?

- Looking ahead to 2026, what is the most critical action a CIO should take now?