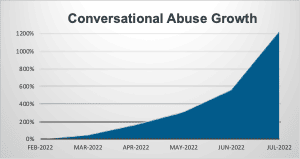

Conversational scams deployed across a range of platforms, including SMS, messaging apps and social media, have grown by 1,200% in the past year, according to research data analysed by cybersecurity firm Proofpoint.

This growth has made conversational threats the largest category of mobile abuse by volume, overtaking package delivery scams, impersonation and other kinds of fraud in some verticals.

According to Proofpoint, growth in conversational threats shows no sign of slowing and has continued through Q1 2023.

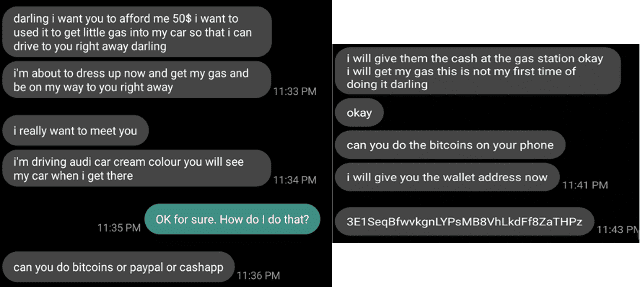

Adam McNeil, senior threat research engineer at Proofpoint, said: “Conversational abuse through text and social media is particularly concerning because threat actors spend time and effort (often weeks) building trust with their targeted victims by striking up what starts out as a benign, innocuous messaging conversation designed to trick them, thus circumventing technical and human defences.”

“There are many variations of these attacks and mobile users should be very skeptical of any messages from unknown senders, especially considering how artificial intelligence tools are making it possible for threat actors to make their attacks more realistic than ever.”

Adam McNeil, Proofpoint

Ultimately, these attacks are a manifestation of social engineering. Skilled manipulators take advantage of ubiquitous mobile communications to cast their net wide and land as many victims as they can.

Pig butchering operations

Last year, conversational scams of the kind now widely known as pig butchering (a term that originated in China) victimised countless people worldwide, in operations that involve a complex web of job fraud, human trafficking and online cryptocurrency fraud.

Thousands of people, most of them from Southeast Asia, have been lured by social media advertisements promising well-paid jobs in Cambodia, Laos and Myanmar, only to find themselves stripped of their passports, identity documents and mobile phones. They were taken to large compounds and forced to work 18-hour days swindling strangers worldwide through various messaging and social media platforms.

Law enforcement authorities in multiple countries have said the pig butchering operations are run by Chinese criminal gangs with ties to gambling across Southeast Asia, which are trying to recover losses incurred during the pandemic lockdowns.

“The fact that attackers have adopted conversational lures in email and mobile, and across both financially motivated and state-sponsored attacks, suggests that the technique is effective,” said McNeil.

“Society’s receptivity to mobile messaging makes it an ideal threat vector, as we tend to read new messages within minutes of receiving them.”

Adam McNeil, Proofpoint

In the US, the FBI has recently noted a sharp increase in the number of victims of conversational scams, with victims losing more than US$3 billion to cryptocurrency scams, of which pig butchering is now a leading example. Romance scams, job fraud and other long-standing forms of conversational attack also remain fixtures in the threat landscape.

“In addition to financial losses, these attacks also extract a significant human cost. Pig butchering and romance scams both involve an emotional investment on the part of the victim. Trust is earned and then abused, which can prompt feelings of shame and embarrassment alongside the real-world consequence of losing money,” said McNeil.

AI will take conversational scams to the next level

With recent advances in generative AI, conversational scammers may not need human help much longer, according to McNeil.

The release of tools like ChatGPT, Bing Chat and Google Bard heralds the arrival of a new kind of chatbot, capable of understanding context, displaying reasoning, and even attempting persuasion.

“And looking further ahead, AI bots trained to understand complex tax codes and investment vehicles could be used to defraud even the most sophisticated victims.”

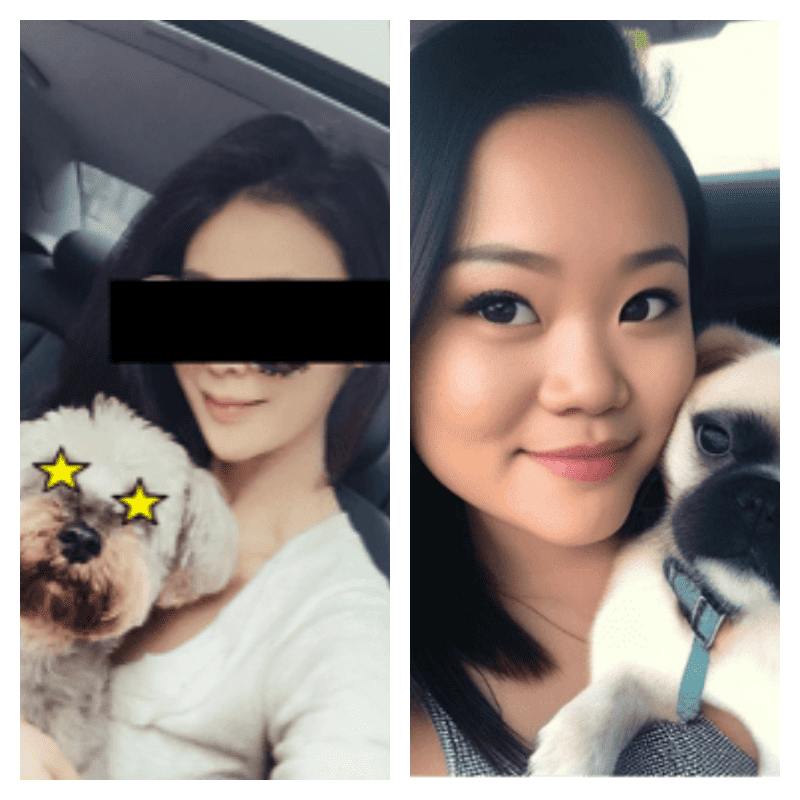

McNeil noted that, coupled with image generation models capable of creating unique photos of realistic-looking people, these chatbots could soon let conversational threat actors use AI as a full-stack criminal accomplice, creating all the assets they need to ensnare and defraud victims.

“And with advances in deepfake technology, which uses AI to synthesise both audio and video content, pig butchering could one day leap from messaging to calling, increasing the technique’s persuasiveness even more.”

Adam McNeil, Proofpoint