The IEEE defines a robot as an autonomous machine capable of sensing its environment, carrying out computations to make decisions, and performing actions in the real world.

An internet bot is a software application that performs automated tasks by running scripts over the internet.

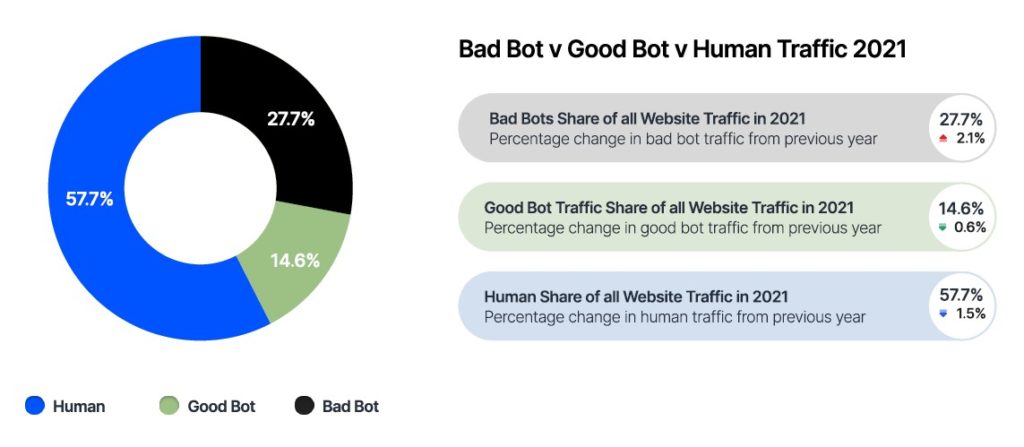

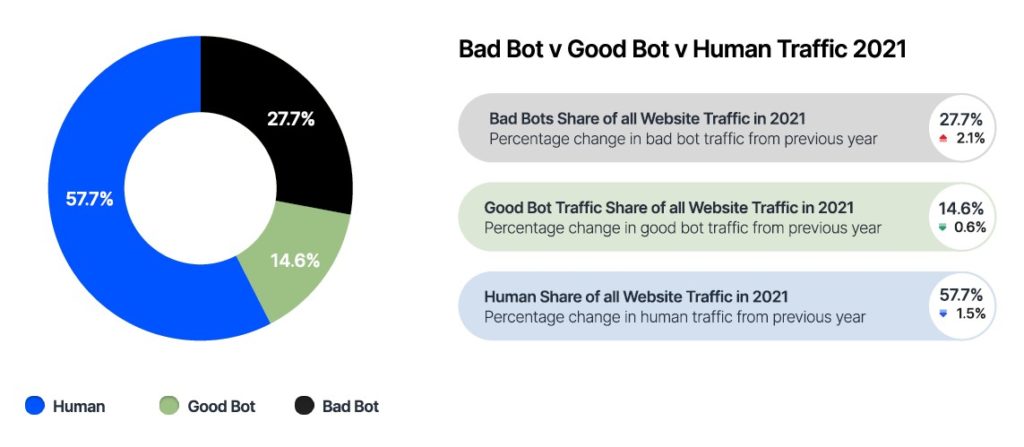

The 2021 Barracuda report, Bot Attacks: Top Threats and Trends, acknowledged that there are good bots and bad ones: “While some bots, such as search engine crawlers, are good, bad bots are built to carry out malicious attacks at scale.”

The 2022 Imperva Bad Bot Report adds that bad bots disguise themselves and try to interact with applications in the same way a legitimate user would, which makes them harder to detect and block. “Bots enable high-speed abuse, misuse, and attacks on your websites, mobile apps, and APIs. They allow bot operators, attackers, unsavoury competitors, and fraudsters to perform a wide array of malicious activities,” the report states.

Reinhart Hansen, director of technology in the office of the CTO at Imperva, says a bot’s intent determines whether it is good or bad. “An example of a bad bot would be something like a content scraping bot, which scrapes product content and pricing information of your website for competitive intelligence and so they can undercut you,” he continued.

Attack of the bad bots

Jamie Boote, software security consultant at Synopsys Software Integrity Group, says bots must continually evolve to avoid detection. Their executables, he explains, are constantly changed and recompiled to evade anti-virus detection.

“Their methods have also had to evolve to evade DDoS detection with low-volume attacks replacing the virtual flood of packets that internet service providers and content distributors can easily detect and mitigate,” he continued.

“When it comes to questions of ‘better’ or ‘worse’, it doesn’t get much direr than having a piece of malware on your system that obeys an attacker command and not yours.”

Jamie Boote

Hansen observed that the pandemic saw many businesses rush to improve their online presence so they could stay in business. This led to many new applications being developed, far more APIs being used, and a great deal of new data being exposed on the internet.

“Bad bots are used to harvest this newly exposed data for identity theft or fraudulent activities, so we have been seeing a steady increase in bad bot traffic,” he continued.

For his part, Boote added that the pandemic also coincided with a few other trends that contributed to the rise of botnets. As smart devices such as watches, thermostats, plugs, doorbells, cars, tractors, and virtually anything else that can connect to the internet were brought online via 5G, botnets found new victims to host their malware.

“Botnets increasing in size by taking advantage of new victim devices in new places combined with increased reliance on remote-work and internet-driven retail services saw increased impact from botnet attacks during the pandemic,” he added.

Mitigating the risks of bad bots

Because bots are designed to run unsupervised in the background, performing a specific task, they are very difficult to detect.

Hansen added that detection is difficult because bots do their best to mimic human behaviour.

“They are also typically distributed in nature, constantly changing the source of their IP address. Some bot operators run emulated bots in a farm, pretending to be thousands of legitimate mobile apps and devices.”

Reinhart Hansen

“Such techniques can be countered by embedding specific technology within an app to detect whether it's being run in a mobile emulation environment instead of on a legitimate device,” he added.

In the Forrester report The Forrester Wave: Bot Management, Q2 2022, author Sandy Carielli said that protecting against a range of attacks; protecting web applications, mobile apps, and APIs; and leveraging machine learning have all become table stakes.

“Modern bot management tools must keep up with ever-evolving attacks, offer a range of out-of-the-box and customizable reports, and enable human end customers to transact business with little friction or frustration,” she continued.

Boote noted that botnet defence requires cooperation and vigilance. When compromised devices number in the hundreds of thousands or millions, the problem is too big for any single user or business to solve. On one side, devices owned by users and businesses need to be patched and monitored to prevent takeover by botnet software.

“They should rely on up-to-date malware signatures to keep their devices and networks clean and practice good digital hygiene when possible. The victims of botnet attacks must work closely with their ISPs and content distribution network providers to blunt the worst of the attacks,” he added.

Ultimately, Hansen believes, online businesses should have proper bot detection and mitigation measures in place to protect their customers’ experience and data.

Managing the bot wars

Should bots be the responsibility of the CISO or the CIO?

For Hansen, this responsibility is typically split between two stakeholders: the security team responsible for application security, and the digital team responsible for the organisation’s digital presence.

“The latter team looks at the issue from a financial perspective – for instance, what sort of impact the bots have on their ability to transact cleanly with their customers, and what sort of potential revenue loss they could experience if the bots are not dealt with appropriately,” he added.

Boote argued that, with a problem as big as botnets, the COO, CIO, or CISO would ultimately oversee all the departments that can be affected by botnets and that contribute to counter-bot responses.

“Their IT department will keep servers and employee devices clean from any botnet malware. Their Operations and Infrastructure teams will be responsible for coordinating network defences against botnet attacks. Their application development teams will need to build software that resists credential stuffing and site scraping,” he concluded.