One of the innovations of the internet is the introduction of software robots, or bots, that allow repetitive tasks to be performed routinely without human intervention. But as with many other technologies, a good thing can be turned to bad ends.

According to the 2022 Imperva Bad Bot Report, bad bots accounted for a record-setting 27.7% of all global website traffic in 2021, up from 25.6% in 2020. The three most common bot attacks were account takeover (ATO), content or price scraping, and scalping to obtain limited-availability items.

The very real threats of bad bots

Garrett O'Hara, field chief technology officer (CTO) for APAC at Mimecast, says bots are particularly dangerous because of the scale at which they can act. The huge volume of traffic they generate, and the activities they are involved in, have significant impacts on businesses.

“There are loads of nefarious activity happening from bad bots across things like account takeovers, identity fraud, and automated fraud. The impact can be significant for organisations and ultimately affect citizens of many countries, then consumers, and then the employees of many companies,” he continued.

How bots have evolved since the pandemic

Recalling how the early days of the COVID-19 pandemic forced businesses, governments, and consumers to go digital very quickly, O’Hara observed that hackers leveraged this and targeted shopping and services sites.

“Bad bots benefit from the evolution of technologies such as artificial intelligence (AI) and machine learning (ML) to allow very good mimicry of human behaviour in their attacks, resulting in more successful and profitable attacks,” he added.

In response to the increased sophistication of attacks, he cited countermeasures adopted by banking organisations, such as examining biometric signals and the signature of how a user types on a keyboard.

“The rate of typing by bots will be consistent because it's programmed to type certain letters. Whereas humans don't space their keystrokes perfectly,” he explained.
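The timing check O’Hara describes can be sketched in a few lines. This is only an illustration of the idea, not a description of any bank's system: the function names, the sample timestamps, and the `min_cv` threshold are all assumptions made for this example. It measures how much the gaps between key presses vary and flags input whose rhythm is suspiciously regular.

```python
from statistics import mean, pstdev

def inter_key_intervals(timestamps_ms):
    """Return the gaps (ms) between consecutive key-press timestamps."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]

def looks_scripted(timestamps_ms, min_cv=0.1):
    """Flag typing whose rhythm is suspiciously regular.

    min_cv is a hypothetical threshold on the coefficient of variation
    (standard deviation / mean) of the gaps; it is not a calibrated value.
    """
    gaps = inter_key_intervals(timestamps_ms)
    if len(gaps) < 5:
        return False  # too little data to judge either way
    avg = mean(gaps)
    if avg <= 0:
        return True  # a non-positive average gap is not plausible for real typing
    return pstdev(gaps) / avg < min_cv

# Made-up samples: evenly spaced presses versus an irregular rhythm.
bot_like = [0, 50, 100, 150, 200, 250, 300]
human_like = [0, 120, 210, 480, 560, 790, 900]

print(looks_scripted(bot_like), looks_scripted(human_like))
```

On its own, a single signal like this would probably be only one input among several in a real detection system.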

Technology is blind to usage

AI has found its way into use cases like chatbots and self-service to mimic how humans like to communicate. The same techniques have also been applied in the fight against bad bots. However, it is here that things may have gone awry.

As it turns out, hackers are using the very same techniques and technology to try and foil attempts to identify and stop bad bots from achieving their purpose (read the 2022 Imperva Bad Bot Report).

O’Hara explains that some attackers are using the same approaches to introduce randomised keystrokes, randomised mouse movements, or delays before clicking on a button.

“What you're fighting against programmatically is a bot attaching itself to a website and trying to undertake a transaction or to scrape some data. Often what's being used is a headless browser (not a normal browser). It doesn't have a user interface. It doesn't show the web page, but it knows how to interact and mimic JavaScript and mimic the interactions that a human would have with a website.”

Garrett O'Hara

“What you need is something that's going to be able to look at (detect) that,” he elaborated.
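As a rough illustration of what "looking at that" could mean on the server side, the sketch below inspects request headers for hints of a headless browser. It rests on assumptions: headless Chrome has, by default, identified itself with a `HeadlessChrome` token in its User-Agent string, and a missing `Accept-Language` header is treated here as a weak hint only. The function name is hypothetical, and every one of these signals is trivial for an attacker to spoof.

```python
def headless_hints(headers):
    """Return reasons a request looks automated, given a dict of HTTP headers."""
    # Header names are case-insensitive, so normalise them first.
    h = {k.lower(): v for k, v in headers.items()}
    ua = h.get("user-agent", "")
    reasons = []
    if not ua:
        reasons.append("missing User-Agent")
    if "headlesschrome" in ua.lower():
        reasons.append("User-Agent advertises HeadlessChrome")
    if "accept-language" not in h:
        reasons.append("missing Accept-Language")
    return reasons

# Made-up request headers for illustration.
suspect = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64) HeadlessChrome/100.0"}
print(headless_hints(suspect))
```

Because these hints are so easy to fake, a check of this kind is best read as a first filter that feeds richer behavioural analysis, not as detection in itself.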

Mitigate the risks of bad bots

Given that hackers are using the very same techniques and technologies built to thwart their efforts, how does one fight an escalating war in which the threats and rewards are real for both sides?

O’Hara offers several options, including the use of dedicated bot management solutions. He cautions that the right solution will likely depend on factors such as the size of the problem, the type of organisation, and the potential impact of bots.

He suggests considering technologies that can analyse all the traffic and the entire scope of where a bot could interact.

“When you're looking at more advanced protections, you also need to consider both server-side and client-side technologies. Programmatically, we are fighting a bot attaching itself to a website. There are active challenges: as interaction is happening, you’re supposed to be injecting things that only a human can detect,” he explained.
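One simple way to picture an injected challenge is sketched below. It is an assumption chosen for illustration, not a technique O’Hara names: the server embeds a signed timestamp in the form it serves, adds a hidden "honeypot" field that a person never sees and so leaves empty, and rejects submissions that arrive implausibly fast. The field names `token` and `website`, the `SECRET` key, and the two-second minimum are all hypothetical.

```python
import hashlib
import hmac
import time

SECRET = b"replace-with-a-real-secret"  # hypothetical key, for illustration only

def _sign(ts):
    """Return the hex HMAC-SHA256 signature of a timestamp string."""
    return hmac.new(SECRET, ts.encode(), hashlib.sha256).hexdigest()

def issue_challenge(now=None):
    """Create a signed timestamp token to embed in the served form."""
    ts = str(int(now if now is not None else time.time()))
    return f"{ts}.{_sign(ts)}"

def passes_challenge(form, now=None, min_seconds=2):
    """Check a submitted form (a dict of field values) against the challenge."""
    now = now if now is not None else time.time()
    ts, _, sig = form.get("token", "").partition(".")
    if not ts.isdecimal():
        return False  # token missing or malformed
    if not hmac.compare_digest(sig.encode(), _sign(ts).encode()):
        return False  # token was tampered with
    if form.get("website", ""):
        return False  # the hidden honeypot field was filled in
    return now - int(ts) >= min_seconds  # too fast suggests automation

token = issue_challenge(now=1000)
print(passes_challenge({"token": token, "website": ""}, now=1005))
```

The checks run entirely on the server, so legitimate users see no extra step, which is one reason this kind of passive challenge is often tried before anything more intrusive.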

How far will you trust a bot?

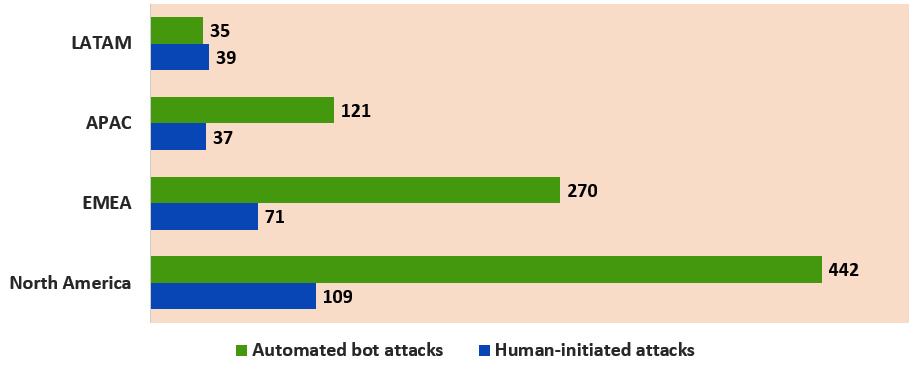

According to Statista, the volume of human-initiated and automated bot attacks in Asia-Pacific during the first half of 2020 was 37 million and 121 million respectively. With such a volume, it is only natural for IT and security teams to use technology, including ML and AI, to detect and counter attacks.

Source: Statista

Asked to what extent IT and security teams can trust the recommendation of bots, O’Hara said the answer depends on where the person sits.

As a technologist, he concedes that he sits in the middle of the debate. He believes that the utility of ML and AI is significant for pattern recognition and automatic response. “In the case of a security operations centre (SOC), you want to choose responses that are well-documented and detailed, and not cause greater damage,” he suggested.

The same thing applies to bots. “Security systems should not introduce friction to real users. We want our customers to be able to buy things without introducing so many checks that they end up getting frustrated. Hence, it is all about maintaining the balance between security and ensuring that businesses continue to operate successfully,” he continued.

Click on the PodChat player and listen to O’Hara provide common but rarely discussed techniques to identify and combat bad bots.

- Among the many kinds of cyber threats on the internet, why are bots dangerous?

- How have bots evolved since the pandemic? Are bad bots today any worse compared to before 2020?

- How do (a) users and (b) businesses contain/mitigate the risks of bad bots?

- Given that smart bots use AI to do good or bad things, at what point in cybersecurity can we trust an AI-based security solution to take remedial action automatically?

- Do you see zero trust being applied to bot security?

- Evasive bots are a growing concern. How do you see the evasion techniques of bots evolving in the coming years, and how can organisations do a better job of detecting them?