Solving complex problems requires loads of data and strong computational power. So, it is not surprising that the convergence of artificial intelligence (AI) and high-performance computing (HPC) has sparked tremendous interest across all industries.

It is easy to see why. HPC simulations rapidly generate valuable data that AI algorithms can ingest to create models. AI, in turn, can improve the quality of HPC simulations and make them more effective by focusing on the significant parameters in the simulation data.

The symbiotic relationship offers a valuable tool for scientists and researchers tackling complex problems.

The advent of artificial neural networks (ANNs), multi-layered fully-connected neural nets that closely approximate human thinking, is a prime example of this convergence. By using HPCs to quickly process large datasets, AI models based on ANNs are helping scientists to find answers to complex questions in chemistry, biology and physics.

But it is not just a matter of charting a way through a maze of previously unsolvable problems. In situations such as the rapidly expanding and unpredictable COVID-19 pandemic, the reality is that new fixes have to be applied as soon as they are found.

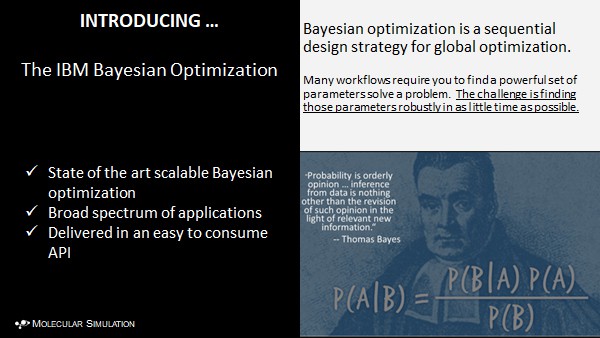

It is here that advances in intelligent simulation and Bayesian optimisation are creating a paradigm shift in HPC simulation, reducing time to discovery and time to insight while delivering higher levels of quality.

The convergence of all of these now ushers in a new renaissance in scientific discovery that allows businesses and the human community to hurdle past seemingly insurmountable barriers, like the ones we face in our current fight against COVID-19.

New parameters to tackle entrenched challenges

At the recent IBM Singapore Supercomputing Virtual Forum 2020, Dr Dario Gil, director of IBM Research, emphasised the importance of the AI-HPC convergence in helping researchers and scientists revisit nagging problems that have gone unchallenged over the years.

With ANNs, companies can now tackle computer vision, speech recognition, and machine translation. ANNs are also evolving fast, with the introduction of convolutional neural networks that are used on two-dimensional data, and generative adversarial networks that can be used to win games or even deceive.

The combination has allowed scientists to simulate complex physical and biological systems, “deepening our understanding of our living space,” said Dr Gil. It is also helping to save lives.

“We can appreciate that HPCs are being used in a whole variety of applications that can have great impact, spanning all industries and sectors,” he added.

Indeed, AI and HPCs are hard at work combating cancer. Dr Gil noted that HPC simulations of interactions between “thousands of pairs of molecules” are helping scientists “to recognise the human leukocyte antigens which are expressed on the tumour cell surfaces”.

While speeding up research, it is also assisting the medical community to create personalised immunotherapies for effective treatment.

AI and HPCs are also helping the scientific community to address COVID-19. IBM is part of the COVID-19 High Performance Computing (HPC) Consortium, where leading vendors, academia, the U.S. Department of Energy and federal agencies like NASA and the National Science Foundation are pooling their HPC resources, AI models and expertise to explore various projects, from computational protein engineering to driving vaccine research.

“We have aggregated 483 petaflops of computing power and counting. By working with this capacity and collaboratively with scientists, the consortium is using both AI and HPC to tackle COVID-19,” said Dr Gil.

Reframing the problem with new insights

AI and HPC are also shaping the way we interact and see our world.

In Singapore, the Agency for Science, Technology and Research (A*STAR) is helping public housing developers “to use 3-D modelling to create the best environment for residents,” said Dr Lim Keng Hui, executive director for the Institute of High Performance Computing at A*STAR.

“We used HPC supercomputers to create a unique environmental modelling platform. It enables our [Housing Development Board] partners, designers and planners to simulate the effect of environmental performance on their designs,” said Dr Lim.

The solution goes beyond commercial software which models the physics of wind flow, air temperature and solar irradiance independently.

“What we did was to create a platform to integrate the physics to simulate the environment accurately. Part of the research involves deploying hundreds of IoT sensors into the environment to validate the model,” he added.

The project won many awards and was featured in National Geographic.

Dr Lim is also integrating HPC and AI to improve ship design. “The AI takes inspiration from human designers and expert knowledge and runs interactively to evolve the design and optimise it,” he continued.

Designers are no longer bogged down by tedious design exploration work and can add inputs to optimise the design further.

The powerful combination is also helping A*STAR to fully enhance transportation design — where AI is used to virtually recreate millions of commuters and different interactions — and improve materials and chemicals development.

“For example, we combine HPC and AI to create an AI-based rapid decision-maker to ingest knowledge from literature and experts, and then use HPC to simulate the data and train the AI decision-maker,” described Dr Lim.

Such a tool helps to speed up development and move away from trial-and-error in materials and chemicals development.

Understanding the quantum impact

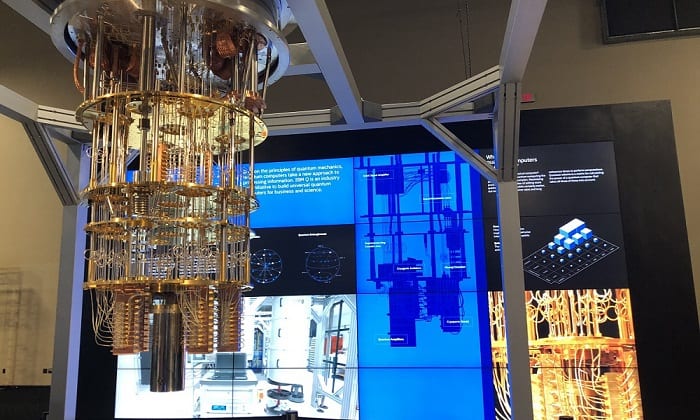

While these solutions are expanding scientists’ and researchers’ ability to find solutions to complex problems, some problems need “exponential” computation, said Dr Gil.

They require a journey from the world of bits to the realm of qubits, which occupy the world of quantum computing. A qubit is a unit of information in quantum information theory.

“The world of bits has a definitive state. In the world of qubits, we explore a different set of principles,” said Dr Gil. The principles include superposition, interference and entanglement.

“The principle of entanglement is particularly interesting and powerful. Because there is no classical analogue to it. By being able to code that principle information into the quantum computer, we tap into an exponential property,” said Dr Gil.

For example, 100 perfectly entangled qubits can represent a “richness of the states” that, in bits, would require every atom on planet Earth to store 0s and 1s. You would need every atom “of the known universe” for 280 perfectly entangled qubits, said Dr Gil.
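The scale behind this comparison can be sketched with a little arithmetic. The snippet below assumes the standard textbook picture, which the article does not spell out, that a general state of n qubits is described by 2^n complex amplitudes. The function name `amplitude_count` is hypothetical, and the figure of roughly 10^80 atoms in the observable universe is a commonly quoted rough estimate, not a number from Dr Gil's talk.

```python
def amplitude_count(n_qubits: int) -> int:
    """Number of complex amplitudes in a general n-qubit state vector (2^n)."""
    return 2 ** n_qubits


# Assumption: a commonly quoted rough estimate, used only for scale.
ATOMS_IN_OBSERVABLE_UNIVERSE = 10 ** 80

for n in (100, 280):
    count = amplitude_count(n)
    order = len(str(count)) - 1  # order of magnitude, i.e. floor(log10(count))
    print(f"{n} qubits -> about 10^{order} amplitudes")

# 2^280 exceeds the rough atom estimate above.
print(amplitude_count(280) > ATOMS_IN_OBSERVABLE_UNIVERSE)
```

Each additional qubit doubles the count, which is the "exponential property" the quote refers to.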

“The reason this matters is that the problems we are going to solve from an information theory perspective are easy and hard. Hard problems are ones that have exponential numbers of variables present. A class of hard problems is non-deterministic polynomial-time (NP) hard problems,” he explained.

“We know that modelling nature itself is a hard problem. It is not only hard to compute but hard to verify,” Dr Gil noted.

Here, quantum computing can help. “Not that I am claiming that quantum computers can solve all hard problems. But that there is a subset of them that has great importance to the world that they will make a difference.”

IBM is making it easier for developers and researchers to tap into the power of quantum computing. “We are going to have libraries of quantum circuits that we are going to embed in the program to tap into the special power of quantum computers,” said Dr Gil.

In quantum information theory, a quantum circuit is a model of quantum computation (based on a sequence of quantum gates).

There are different types of circuits, such as one for machine learning applications that determine financial risks.
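To make the idea of a circuit as a sequence of gates concrete, here is a minimal sketch that simulates a two-gate circuit on two qubits in plain Python. It is an illustration only and not IBM's circuit library: the helper names `apply_hadamard` and `apply_cnot` are hypothetical, and the state is stored as a simple list of amplitudes. The circuit applies a Hadamard gate followed by a controlled-NOT, a standard way to produce an entangled pair.

```python
import math


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a state vector (list of amplitudes)."""
    s = 1 / math.sqrt(2)
    new = list(state)
    bit = 1 << target
    for i in range(len(state)):
        if not i & bit:  # visit each pair (i, i | bit) exactly once
            a, b = state[i], state[i | bit]
            new[i] = s * (a + b)
            new[i | bit] = s * (a - b)
    return new


def apply_cnot(state, control, target):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new = list(state)
    for i in range(len(state)):
        if i & (1 << control):
            new[i ^ (1 << target)] = state[i]
    return new


# Two qubits start in |00>: all amplitude sits on index 0.
state = [1.0, 0.0, 0.0, 0.0]
state = apply_hadamard(state, target=0)         # superposition on qubit 0
state = apply_cnot(state, control=0, target=1)  # entangle qubit 1 with qubit 0

# Measurement probabilities for the basis states 00, 01, 10, 11 (by list index).
probabilities = [round(abs(a) ** 2, 3) for a in state]
print(probabilities)  # [0.5, 0.0, 0.0, 0.5]
```

Only the outcomes where both qubits agree have non-zero probability, which is the signature of the entanglement described earlier.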

Levelling up: AI-HPC convergence with quantum computing

Daimler is exploring how quantum computing can create better batteries for future electric cars. Exxon is exploring quantum computing to develop the next generation of energy solutions.

JP Morgan Chase is exploring optimisation approaches using quantum algorithms, “for example creating a derivative pricing circuit relevant to them”, said Dr Gil.

The applications of quantum computing are vast and continue to expand. However, the real power lies in the convergence of HPC, AI and quantum computing.

“We know that neural architectures, the foundations of modern-day AI, are also creating a revolution in how we represent information. And qubits represent information in a completely new way by bringing the worlds of physics and computing together. As impressive as these technologies are, the most profound implications lie in their convergence,” explained Dr Gil.

He imagines the future of computing will be one of bits, qubits and artificial neurons coming together, orchestrated through a hybrid cloud fabric.

“Imagine what is going to happen when they come together,” said Dr Gil.

Scaling AI

Meanwhile, in this unprecedented time with COVID-19, an opportunity exists for AI-HPC convergence to solve real-world issues in areas such as medical diagnostics, genomics, molecular simulations, data fusion, biomolecular structure, and quality inspection.

Companies taking the plunge, however, must bear in mind that the technology comes with a caveat.

Research by Boston Consulting Group (BCG) revealed that unleashing the true power of AI requires scaling it across the entire business. Yet at this decisive stage, companies hit what BCG has termed the “AI paradox”: successful pilots are easy to achieve but scaling the solution is very difficult. This is because AI systems change constantly as they ingest data and learn from it. Seemingly isolated use cases interact and become entangled.

Clarisse Taaffe-Hedglin, executive IT architect, IBM Systems, pointed out that the IBM HPDA Reference Architecture offers a framework for designing, deploying, growing and optimising infrastructure for HPC, AI and Cloud.

The framework was created in collaboration with the world’s healthcare and life sciences institutions, and uses Red Hat OpenShift, IBM Power Systems, IBM Storage and Open API endpoints.

Taaffe-Hedglin noted that as AI models progress from prototype to production, most are developed by data scientists on laptops, in small environments where computing power is limited to just a few CPUs or possibly a GPU.

“What we want to do is to be able to get those models trained at a much better and higher scale to get better accuracies and faster time to resolve. IBM delivers AI at scale through various tools that all work in concert,” she said.

The way forward

Gartner predicts that by 2023, AI will be one of the top workloads that drive enterprise infrastructure decisions. But as enterprises scale their needs, AI will require a rethink on the part of businesses to make sure that the infrastructure is able to dynamically scale and keep up with the performance requirements.

IDC says the only certainty in all of this is that omnipresent homogeneous general-purpose computing resources have no place in this future. Organisations must, therefore, look at re-architecting their infrastructure strategy to support AI workloads.