Sophos has uncovered multiple apps masquerading as legitimate ChatGPT-based chatbots to overcharge users and bring in thousands of dollars a month.

The company dubbed these scam apps “fleeceware” because the free versions have near-zero functionality and constant ads, which coerce unsuspecting users into signing up for a subscription that can cost hundreds of dollars a year. These apps have popped up in both Google Play and the Apple App Store.

“Scammers have and always will use the latest trends or technology to line their pockets. ChatGPT is no exception. With interest in AI and chatbots arguably at an all-time high, users are turning to the Apple App and Google Play Stores to download anything that resembles ChatGPT,” said Sean Gallagher, principal threat researcher, Sophos.

Use of social engineering and coercive tactics

The key characteristic of fleeceware apps, which Sophos first discovered in 2019, is that they overcharge users for functionality that is already free elsewhere and use social engineering and coercive tactics to convince users to sign up for a recurring subscription payment.

Usually, the apps offer a free trial, but with so many ads and restrictions that they are barely usable until a subscription is paid. The apps are often poorly written and implemented, so they can perform badly even after users switch to the paid version. They also inflate their app store ratings through fake reviews and persistent prompts asking users to rate the app before they have even used it or before the free trial ends.

“Fleeceware often bombard users with ads until they sign up for a subscription. They’re banking on the fact that users won’t pay attention to the cost or simply forget that they have this subscription. They’re specifically designed so that they may not get much use after the free trial ends, so users delete the app without realising they’re still on the hook for a monthly or weekly payment.”

Sean Gallagher, Sophos

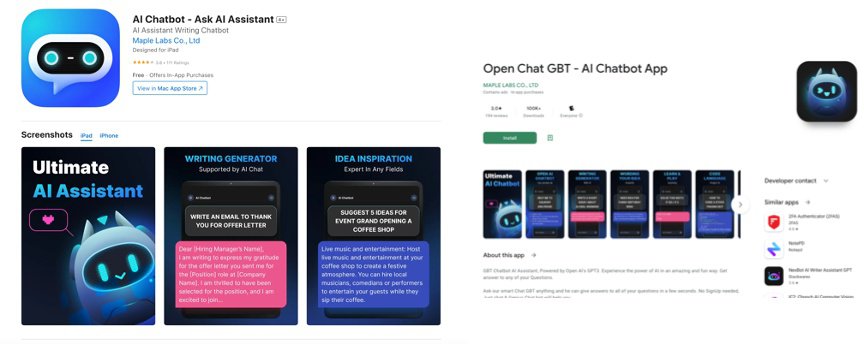

Sophos X-Ops’ latest report, entitled “‘FleeceGPT’ Mobile Apps Target AI-Curious to Rake in Cash”, investigated five of these ChatGPT fleeceware apps, all of which claimed to be based on ChatGPT’s algorithm.

The report revealed that developers played off the ChatGPT name, as in the case of the “Chat GBT” app, to improve their app’s ranking in Google Play or the App Store. While OpenAI offers the basic functionality of ChatGPT to users for free online, these apps were charging anything from US$10 a month to US$70 a year. The iOS version of “Chat GBT,” called Ask AI Assistant, charges US$6 a week (US$312 a year) after the three-day free trial; it netted the developers US$10,000 in March alone. Another fleeceware-like app, called Genie, which encourages users to sign up for a US$7 weekly or US$70 annual subscription, brought in US$1 million over the past month.
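To make the pricing above easier to compare, the short Python sketch below annualises a recurring charge. The function name `annual_cost` and the 52-weeks-per-year convention are assumptions made for illustration; the prices are the ones quoted in the article, and the sketch is not taken from the Sophos report.

```python
# Illustrative only: annualise the subscription prices quoted in the article.
# Assumes 52 billing weeks and 12 billing months per year.
PERIODS_PER_YEAR = {"week": 52, "month": 12, "year": 1}


def annual_cost(price: float, period: str) -> float:
    """Return the yearly cost in US$ of a subscription billed once per `period`."""
    return price * PERIODS_PER_YEAR[period]


if __name__ == "__main__":
    # (label, price in US$, billing period) as quoted in the article
    plans = [
        ("Ask AI Assistant, weekly plan", 6, "week"),
        ("Genie, weekly plan", 7, "week"),
        ("Genie, annual plan", 70, "year"),
    ]
    for label, price, period in plans:
        print(f"{label}: US${annual_cost(price, period)} per year")
```

On these assumptions, Genie’s US$7 weekly plan works out to US$364 a year, more than five times the cost of its US$70 annual plan, which is why it pays to check the billing period as well as the headline price.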

All apps included in the report have been reported to Apple and Google. Users who have already downloaded these apps should follow the Apple App Store’s or Google Play’s guidelines on how to “unsubscribe.” Simply deleting the fleeceware app will not cancel the subscription.

According to Gallagher, fleeceware apps are specifically designed to stay on the edge of what Google’s and Apple’s terms of service allow.

“They don’t flout the security or privacy rules, so they are hardly ever rejected by these stores during review. While Google and Apple have implemented new guidelines to curb fleeceware since we reported on such apps in 2019, developers are finding ways around these policies, such as severely limiting app usage and functionality unless users pay up,” he added.

Some of the ChatGPT fleeceware apps included in the latest Sophos X-Ops report have already been taken down, but new ones continue to pop up, and more are likely to follow.

“The best protection is education. Users need to be aware that these apps exist and always be sure to read the fine print whenever hitting ‘subscribe.’ Users can also report apps to Apple and Google if they think the developers are using unethical means to profit,” said Gallagher.