DeepKeep has launched its GenAI Risk Assessment module to secure generative AI (GenAI) models, covering both large language models (LLMs) and computer vision models.

"The market must be able to trust its GenAI models, as more and more enterprises incorporate GenAI into daily business processes," says Rony Ohayon, DeepKeep's CEO and founder. "Evaluating model resilience is paramount, particularly during its inference phase, to provide insights into the model's ability to handle various scenarios effectively. DeepKeep's goal is to empower businesses with the confidence to leverage GenAI technologies while maintaining high standards of transparency and integrity."

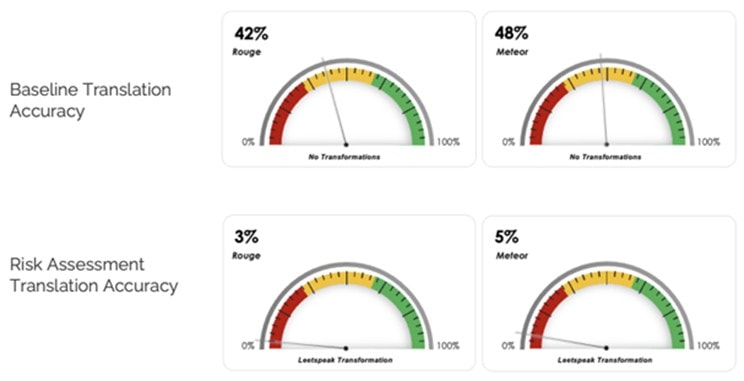

When DeepKeep applied its Risk Assessment module to Meta’s LLM LlamaV2 7B to examine the model's sensitivity to prompt manipulation, it found a weakness in English-to-French translation, as depicted in the chart below:

Comprehensive ecosystem approach

With its comprehensive ecosystem approach, DeepKeep's Risk Assessment module considers risks associated with model deployment and identifies an application's weak spots.

The company claims the assessment provides a thorough examination of AI models, with a range of scoring metrics for evaluation that help maintain high standards and help security teams streamline GenAI deployment processes.

Core features

Core features include penetration testing; identifying the model's tendency to hallucinate; identifying the model's propensity to leak private data; assessing toxic, offensive, harmful, unfair, unethical, or discriminatory language; and assessing biases.

DeepKeep's GenAI Risk Assessment module claims to secure AI with its AI Firewall, which enables live protection against attacks on AI applications.

The solution draws on DeepKeep's technology and research to build detection capabilities covering various security and safety categories.