IDC predicts that by 2025, 90% of global organisations will be staring down the barrel of a crippling IT skills crisis. "The cost: More than US$6.5 trillion in missed product releases, impaired competitiveness, lower customer satisfaction, and missed revenue goals. From security and data to AI, cloud, and more, tech talent is at a premium," said the analyst.

For some enterprises, Generative AI (GenAI) may hold the key to alleviating this skills shortage. It can help IT teams accelerate the development and testing of applications, or it can let the general "enterprise population" use GenAI themselves to build the applications they need to do their jobs.

Acknowledging that traditional AI development is constrained by the limited technical skills available in the market, McKinsey senior partner Lareina Yee contrasts this with GenAI. Highly skilled people are still needed to build large language models and train generative models, but users can be nearly anyone, and they won’t need data science degrees or machine learning expertise to be effective.

Why GenAI

Mike Assin, a principal at Heidrick & Struggles Hong Kong, sees benefits beyond the IT skills shortage. He says that in addition to AI's potential to identify talent and bring greater objectivity, GenAI provides a near real-time view of employee sentiment about organisational culture, which can improve employee engagement.

Frank Windoloski, EVP for Insights & Data, APAC, at Capgemini, says GenAI empowers human creativity, which drives value creation through innovation, and boosts productivity through tasks such as content creation and automation.

The case for caution

As powerful as the technology is, Endava CTO Matthew Cloke cautions that AI cannot eliminate the problem of misinformation, because it draws its data from public internet sources.

"Its wide influence also poses cybersecurity risks, especially with the lack of regulations or a governance framework in place to navigate misinformation and data privacy concerns," he continues.

Paul Simos, VP and MD of SEAK at VMware, also points to this lack of regulation, cautioning that without a framework, AI output raises ethical concerns around accuracy, inclusivity and regulation.

How to deploy GenAI in the enterprise

Cloke cautions that not all business processes require aggressive AI implementation. He suggests leaders take a deliberate approach to deploying AI, starting with simpler, more routine tasks and then progressively scaling to sophisticated AI-driven processes in a low-risk way. As part of this process, fostering stakeholder engagement and upskilling are also vital to ensure that stakeholders can adapt to the new tools.

For his part, Assin says leaders need to muster their digital dexterity to adapt to and stay ahead of disruptive trends such as AI. He opines that even as AI continues to evolve and its output becomes more optimised, relationships and judgement remain critical to its success.

He adds that it’s important for leaders to track AI’s progress and to educate internal and external stakeholders, clearly communicating AI’s new opportunities, risks, and policy changes.

Windoloski calls for upskilling engineers in newer approaches to model learning and optimisation, training employees in prompt use and output validation, and encouraging experimentation.

What about compliance?

Ran Xu, a director of research in the Gartner Risk & Audit Practice, says that the mass availability of GenAI, including ChatGPT and Google Bard, became a top concern for enterprise risk executives in the second quarter of 2023.

To counter or contain the risks, Cloke says building a cyber-aware culture is necessary for organisations to navigate the evolving challenges of AI.

"The more intelligent and powerful the technology becomes, the more crucial it is to anticipate heightened cybersecurity threats, including deepfake-based attacks. As a substantial portion of risk originates from human error, organisations should train their employees to pre-empt exploitation and mitigate these threats," says Cloke.

Assin suggests CIOs prioritise the responsible use and compatibility of their generative AI systems to ensure that they keep up with evolving regulations. "While established regulations around Generative AI will come with time, CIOs have the responsibility to build internal ethical principles and risk guardrails to be used during its development and deployment phases," he continues.

Simos says auditing will be a key governance mechanism to confirm that AI systems are designed and deployed in line with a company’s goals. He adds that use cases for generative AI should be evaluated by members of the security team, who are familiar with the risks of AI and data, to protect the organisation.

Balancing innovation, opportunity and reality

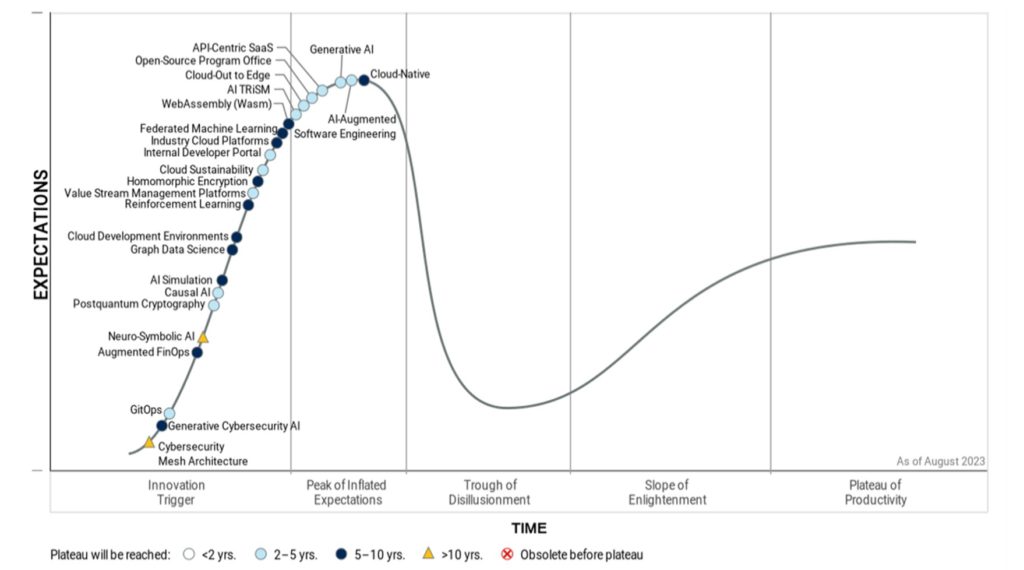

In its Hype Cycle for Emerging Technologies 2023, Gartner positioned GenAI at the Peak of Inflated Expectations.

Said Arun Chandrasekaran, distinguished VP analyst at Gartner: “The massive pretraining and scale of AI foundation models, viral adoption of conversational agents and the proliferation of generative AI applications are heralding a new wave of workforce productivity and machine creativity.”

While conceding that GenAI is a powerful tool that can amplify employees’ capabilities, Endava's Cloke cautions that AI is not a replacement for human creativity and decision-making. He suggests that engaging the right experts for advice and ensuring the right skills are in place to manage AI tools effectively will allow organisations to reap the benefits of the technology.

To bring greater clarity to Generative AI adoption, Assin believes leaders need to set a clear strategy for deploying Generative AI, including its scope, timeline and success metrics. Against the backdrop of GenAI's inevitable integration into employees' day-to-day work, organisations should focus on the risks of the technology and the rules for its use and adoption.

"It is crucial to find solutions that satisfy businesses’ needs in a safe environment. This requires implementing local instances of AI tools, to ensure that the data given to the AI stays within a controlled environment," says Assin.

While endorsing a culture of experimentation, VMware's Simos believes that regularly assessing the feasibility, ethical considerations and potential impact of AI-generated ideas ensures that innovation remains grounded.

"Effective communication, collaboration and a willingness to iterate are essential in guiding the integration of generative AI to create real-world value without losing sight of practicality," says Simos.

Here, he points to the CTO organisation as key to assessing the risk and feasibility of these technologies, and to validating which public and private AI technologies are safe to use, so that customer data stays private and secure.

Takeaways

Capgemini's Windoloski reminds us that GenAI is not “intelligent” in itself. "Humans need to build safeguards around them to guarantee the quality of their output. GenAI content must also be reviewed and tuned for accuracy before use."

He cites three approaches to securing GenAI:

Governance and Tailored Guidelines: Establish secure Generative AI use with custom guidelines for functional, legal, and ethical risks.

Bespoke Solutions: Craft custom, business-driven solutions rather than relying on publicly available tools to ensure reliability, security, and control. Verify data output against established guidelines to further refine and improve Generative AI tools.

Human Expertise and Diversity: Build safeguards with diverse teams of AI experts who have extensive cross-domain machine learning experience, to counter bias and ensure ethical trust.