At a recent media briefing, Elastic’s Ravi Rajendran, area vice president for ASEAN, joined Chris Walker, vice president of architectural solutions for APJ, to present key findings from a Vanson Bourne study on the data challenges organisations face in the region.

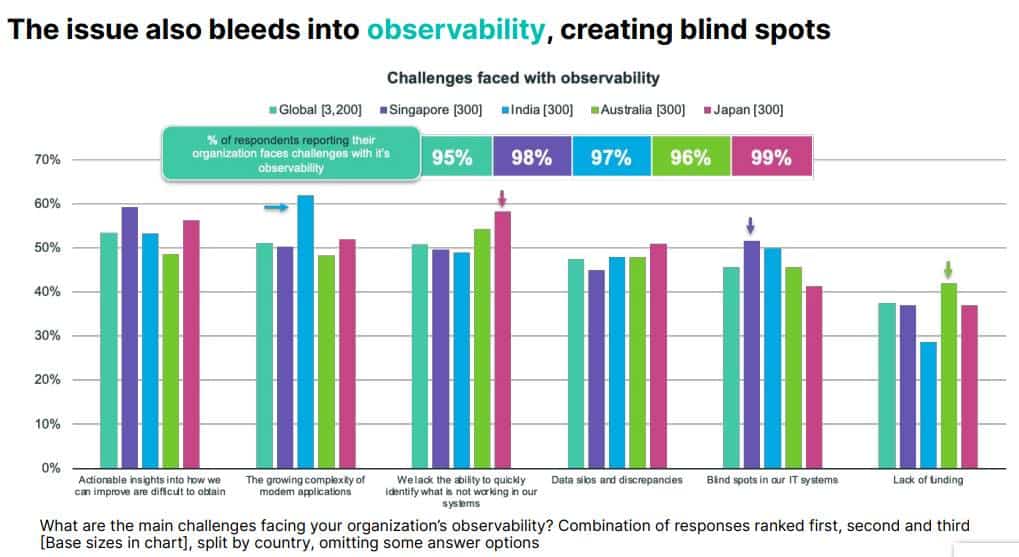

One of the topics covered in the survey was the challenges organisations face around observability. Observability is the extent to which you can understand the internal state or condition of a complex system based only on knowledge of its external outputs. The theory is that the more observable a system is, the more quickly and accurately you can identify a problem and its root cause, without additional testing or coding.

IBM says observability provides deep visibility into modern distributed applications for faster, automated problem identification and resolution.

Gartner defines observability platforms as products used to understand the health, performance and behaviour of applications, services and infrastructure. They ingest telemetry (operational data) from a variety of sources, including but not limited to logs, metrics, events and traces.

Observability solutions are used by IT operations, site reliability engineers, cloud and platform teams, application developers, and product owners.

At the Elastic media briefing, following the presentation, Rajendran and Walker took questions from FutureCIO.

How do you balance observability and privacy?

Chris Walker: The role of observability is to ensure that the IT systems and applications under surveillance are performing as intended. Once observability is implemented, consideration must be given to the data being collected.

"One should critically assess whether collecting certain data, potentially sensitive or personal, aligns with privacy concerns. For instance, is it necessary to collect credit card information through applications? This type of data might not be relevant to observability objectives and, therefore, should not be collected."

Chris Walker

Alternatively, there may be situations where data collection is warranted, but it should be anonymised or masked within the observability system to prevent unauthorised access. This approach ensures that sensitive information, such as financial data, is only accessible to those with a legitimate need to analyse it.
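As an illustration of the masking approach Walker describes (not something shown at the briefing), the sketch below scrubs a log record before it reaches an observability pipeline. The field name `card_cvv`, the helper names and the card-number pattern are all hypothetical, and the pattern is deliberately crude: a production system would use a vetted detector rather than a single regular expression.

```python
import re

# Assumption: a card number is 13 to 16 digits, optionally separated
# by single spaces or hyphens. This is a rough heuristic, not a validator.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def mask_card_numbers(message):
    """Replace anything that looks like a card number, keeping the last four digits."""
    def _mask(match):
        digits = re.sub(r"\D", "", match.group())
        return "*" * (len(digits) - 4) + digits[-4:]

    return CARD_PATTERN.sub(_mask, message)


def scrub_record(record, drop_fields=("card_cvv",)):
    """Drop fields that should never be collected and mask string values."""
    cleaned = {}
    for key, value in record.items():
        if key in drop_fields:
            continue  # not relevant to observability, so never forwarded
        cleaned[key] = mask_card_numbers(value) if isinstance(value, str) else value
    return cleaned
```

Calling `scrub_record` on a record whose message contains `card=4111-1111-1111-1111` yields `card=************1111`, and the `card_cvv` field is removed entirely.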

Ravi Rajendran: Additionally, implementing robust security measures within observability solutions is crucial. These measures ensure that users can access only the data relevant to their roles, thereby preventing unauthorised data access.
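To make the idea of role-scoped access concrete, here is a minimal sketch that is an editorial illustration rather than a description of any vendor's product. The role names, field names and the `ROLE_FIELDS` mapping are assumptions invented for the example.

```python
# Hypothetical mapping of roles to the telemetry fields each may see.
ROLE_FIELDS = {
    "sre": {"timestamp", "service", "latency_ms", "status"},
    "fraud_analyst": {"timestamp", "service", "status", "card_last4"},
}


def view_for_role(record, role):
    """Return only the fields the given role is allowed to read.

    Unknown roles get an empty view, so access is denied by default.
    """
    allowed = ROLE_FIELDS.get(role, set())
    return {key: value for key, value in record.items() if key in allowed}
```

Denying by default means a misconfigured or unrecognised role sees nothing, rather than everything.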

Achieving the right balance is essential because, without observability, organisations are blind to the operational status of their systems. This lack of visibility increases the risk of exposure.

One of the key benefits of observability is anomaly detection. By monitoring metrics, logs and traces across platforms, systems, networks and applications, organisations can identify and address anomalies that may indicate security issues.
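A simple way to picture anomaly detection is a rolling statistical baseline. The sketch below is an editorial illustration under stated assumptions: it flags a metric value when it sits more than `threshold` standard deviations from the mean of the preceding `window` samples. The function name and default parameters are hypothetical, and commercial platforms typically use more sophisticated models.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indices of values that deviate sharply from the preceding window."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # A perfectly flat baseline: treat any change as anomalous.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

For the latency series `[100, 102, 98, 101, 99, 100, 450, 101]`, the function returns `[6]`, the index of the 450 ms spike.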

Are all observability tools created equal?

Ravi Rajendran: Across the various organisations that we service and engage with, we've noticed they don't rely on a single tool; they have multiple tools, sometimes as many as 15 to 20. These tools are specialised and designated for networks, applications or systems, and sometimes there are even multiple tools within the same area for different applications.

"One of the biggest challenges arises in how to integrate this data into a unified dashboard to correlate information effectively. In the event of an incident, it becomes crucial to have a system in place that not only gathers but also intelligently analyses data from these disparate sources."

Ravi Rajendran

The key for organisations is not to debate which tool is superior but to seek a platform capable of offering a unified view of all potential metrics, logs and traces relevant to their operations. This enables effective correlation of data when incidents occur.
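The correlation step can be sketched in a few lines. This is an editorial illustration, not Elastic's implementation: it assumes every event, whatever tool produced it, carries a shared `trace_id` and a numeric `timestamp`, which is a simplification (metrics in particular often lack a trace identifier and are matched by service and time window instead).

```python
from collections import defaultdict


def correlate_by_trace(logs, metrics, traces):
    """Merge events from separate sources into one timeline per trace_id."""
    timeline = defaultdict(list)
    for source, events in (("log", logs), ("metric", metrics), ("trace", traces)):
        for event in events:
            # Tag each event with its origin so the unified view stays readable.
            timeline[event["trace_id"]].append({**event, "source": source})
    for events in timeline.values():
        events.sort(key=lambda e: e["timestamp"])
    return dict(timeline)
```

With a unified timeline per request, an engineer investigating an incident can read the trace span, the resource metric and the error log in order, instead of switching between separate tools.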

Focusing solely on tools may suffice for basic needs, but adopting a platform approach offers a distinct advantage. The integration of observability with search and security functions significantly enhances an organisation's responsiveness to incidents. With a robust platform, the speed at which data can be searched and analysed becomes a critical feature, especially in identifying and addressing anomalies in the environment before they escalate.

Moreover, some organisations are advancing beyond reactive and proactive monitoring towards predictive analytics. They are exploring capabilities to forecast potential issues based on current trends and data. This predictive approach requires a unified platform that goes beyond traditional observability, encompassing rapid response mechanisms and advanced analytical capabilities to pre-empt security incidents.

Chris Walker: When considering a platform, it's essential to recognise the vast array of security and observability data it must handle. The challenge lies in not just collecting this data but in applying the right filters to surface relevant insights quickly.

In today's environment, it is advisable to move away from independent tools that address only a fraction of the required functionality, such as those focusing solely on logs or on Application Performance Monitoring (APM), which covers traces.

A holistic solution that can aggregate and analyse data across metrics, traces, logs, and security information is essential. Such a platform allows for a dynamic approach to data analysis, enabling organisations to respond swiftly and effectively to emerging challenges.

What is the learning curve for, or willingness to adopt, a centralised observability tool among those in IT who are responsible for monitoring telemetry coming in from different systems, including cybersecurity?

Ravi Rajendran: Many organisations using siloed tools must consider how to merge their data to derive relevant insights. By consolidating this data through a single platform, organisations can adjust the focus on the data to obtain the desired outcomes.

There is a notable increase in awareness of the value this approach provides, as evidenced by the number of customers we engage with who are willing to adopt a centralised observability framework. Some of the larger enterprises are even looking at establishing Centres of Excellence (CoE) specifically for observability.

For instance, a company utilising 20 different tools can greatly benefit from a platform capable of integrating these tools to enhance its observability capabilities. What we're observing in the market is a shift away from the siloed approach that creates problems, towards leveraging CoEs for a comprehensive view of an organisation's assets.

Chris Walker: Organisations that have undergone this transformation, especially those with multiple tools, often face significant costs. These costs arise from the need to train staff on various tools, manage contracts with multiple vendors, and process the data collected from these disparate sources. All these activities contribute to the overall expense.

Consequently, these organisations frequently collaborate with their enterprise architects. These teams are tasked with considering how tool consolidation can be achieved and how to move towards a platform-centric worldview. This is a trend we are currently seeing among several organisations we are working with.