In a Gartner poll of 2,500 executives, 45% reported that the publicity around ChatGPT has prompted them to increase their artificial intelligence (AI) investments. The survey also found that 70% said their organisation is in investigation and exploration mode with generative AI, while 19% are in pilot or production mode.

All this interest in ChatGPT and generative AI should be a cause for concern for CISOs, compliance teams and perhaps CIOs. Some observers believe that AI is still a nascent technology, particularly when applied to business-critical use cases.

Frances Karamouzis, distinguished VP analyst at Gartner, cautions that organisations will likely encounter a host of trust, risk, security, privacy and ethical questions as they start to develop and deploy generative AI.

Satnam Narang, senior staff research engineer at Tenable, says: "ChatGPT is not inherently built for cybersecurity, but it is being used by a variety of individuals, including cybersecurity practitioners, based on the large corpus of data that it has been built upon."

He added that ChatGPT can supplement a practitioner’s workflow and help address common cybersecurity issues at a broad level, but that it still has a long way to go.

That caveat alone should be a warning flag.

Imperva CTO Kunal Anand added that the generative capabilities in ChatGPT can help threat actors discover and iterate on new attacks faster. "There are known attempts at harnessing the technology to find and exploit weaknesses in signature-based systems like anti-phishing and anti-malware solutions," he pointed out.

On a positive note, he conceded that the transformer model underpinning ChatGPT can enable innovations in cyber defence systems and capabilities. Leading cybersecurity companies are already implementing transformer models in their products.

Just how efficient or effective is ChatGPT against malware?

Anand acknowledged that the battle with malware developers is a cat-and-mouse game. He added that malware developers focus on evading existing detection capabilities, which rely on signatures and definitions that are continually becoming outdated.

"A GPT model can level the playing field until attackers use their own GPT model to identify and target weaknesses," he opined.

John Rodriguez, senior offensive security researcher at Trellix, says ChatGPT's natural language processing capabilities can potentially aid in developing code, guided investigations, and plans to combat possible cyber threats.

Given that ChatGPT can also be used for nefarious purposes, he suggests that organisations implement strong security protocols and educate employees on recognising and responding to potential threats.

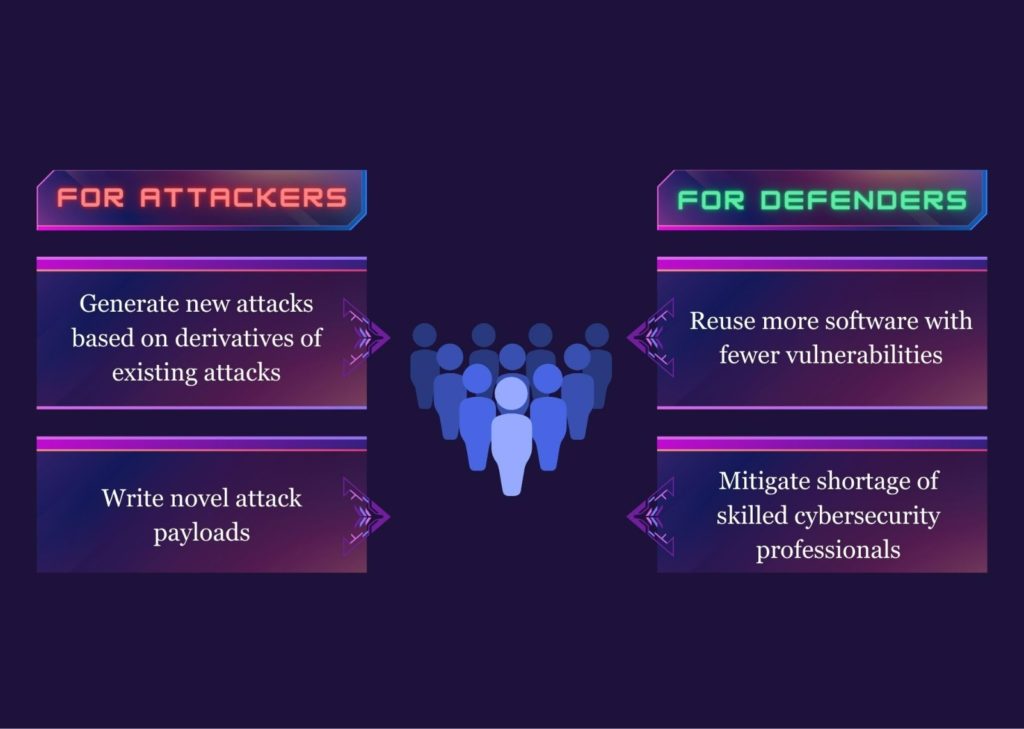

An offensive and defensive weapon

Narang says that, for attackers, the real value of ChatGPT lies in its ability to help improve templates for phishing attacks and scams, such as dating app profiles. It can also provide some guidance on conducting cyberattacks.

"Ideally, ChatGPT shouldn’t provide a step-by-step guide on how to conduct a cyberattack, but leveraging different ways of formatting their questions, users could be given enough guidance to help point them in the right direction."

Satnam Narang

"From a defensive perspective, it could be a companion in the toolbox for a cybersecurity practitioner on the defender’s side – supplementing a practitioner’s workflow and aiding in addressing common cybersecurity issues at a broad level, but it does have a long way to go still for these use cases," he continued.

When it makes sense to embed ChatGPT as part of cybersecurity

Asked what conditions would justify embedding ChatGPT in an organisation's cybersecurity strategy, Rodriguez argues that doing so can be justified if the tool helps reduce complexity by developing code, steps, guided investigations and plans to combat potential threats.

For use cases such as writing secure code, generating unit and functional test code, and identifying security vulnerabilities in software, Anand raised one caveat: "An organisation should take the time to train the model with labelled (good/bad) data. By training the model, an organisation can reduce false positives and negatives, saving time and resources."

Challenges to overcome when integrating ChatGPT into cybersecurity strategy

Rodriguez lists three challenges: protecting sensitive user data, user awareness and training, and system integration. On the last of these, he cautions that CISOs and CIOs need to ensure that the chatbot integrates seamlessly with existing systems without introducing new security risks.

For his part, Narang says ChatGPT continues to raise debate around privacy concerns, such as the sharing of sensitive information like customer data. "This debate is happening as we speak, and we can expect it to continue as models like GPT-4 and further iterations come to life and expand the use cases available, such as with sharing images instead of just text data," he added.

Advice going forward

"We don’t yet fully comprehend the short- and long-term effects and consequences, both positive and negative, of ChatGPT," began Anand.

"These teams should discuss model training safety and how to prevent employees from inadvertently sharing sensitive data. This evaluation should include a clear understanding of the use cases, benefits, and risks associated with implementing ChatGPT in their firm," he suggested.

Narang notes that organisations are 'barrelling' towards a future where large language models (LLMs) like GPT are incorporated into various platforms. He cautions that in the rush to adopt LLMs for cybersecurity, it remains paramount that organisations carefully consider the privacy and security ramifications of sharing sensitive information, such as customer data or trade secrets, with LLMs such as GPT-3.5 and GPT-4 through ChatGPT.

Gartner recommends creating a company policy rather than blocking ChatGPT. "Your knowledge workers are likely already using it, and an outright ban may lead to 'shadow' ChatGPT usage, while only providing the organisation with a false sense of compliance.

"A sensible approach is to monitor usage and encourage innovation but ensure that the technology is only used to augment internal work and with properly qualified data, rather than in an unfiltered way with customers and partners," concluded the analyst.