A new Gartner report predicts that 40% of generative AI (GenAI) solutions will be multimodal by 2027, up from only 1% in 2023. Gartner expects multimodal (text, image, audio, and video) GenAI to improve human-AI interaction and to give providers an opportunity to differentiate their GenAI-enabled offerings.

“As the GenAI market evolves towards models natively trained on more than one modality, this helps capture relationships between different data streams and has the potential to scale the benefits of GenAI across all data types and applications. It also allows AI to support humans in performing more tasks, regardless of the environment,” Erick Brethenoux, distinguished VP analyst at Gartner, said.

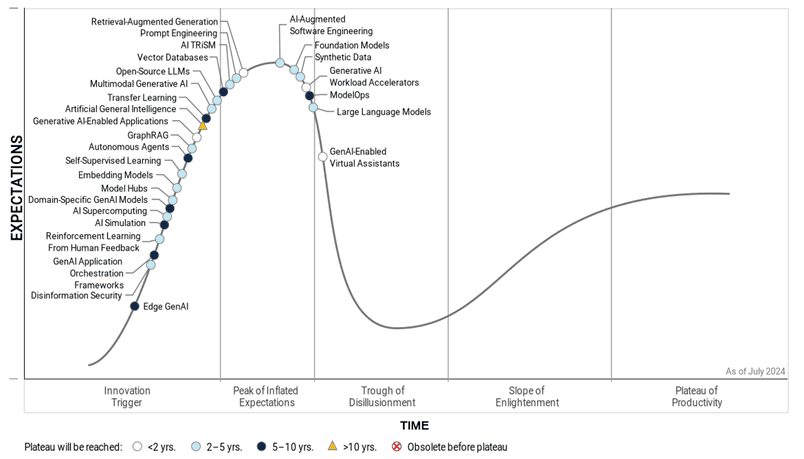

Hype cycle for GenAI

“Navigating the GenAI ecosystem will continue to be overwhelming for enterprises due to a chaotic and fast-moving ecosystem of technologies and vendors,” said Arun Chandrasekaran, distinguished VP analyst at Gartner.

“GenAI is in the Trough of Disillusionment with the beginning of industry consolidation. Real benefits will emerge once the hype subsides, with advances in capabilities likely to come at a rapid pace over the next few years,” he added.

Multimodal GenAI

Multimodal GenAI can enable additional features and functionality at any touchpoint between AI and humans. Gartner also expects the number of modalities that multimodal models support to grow beyond the current two or three.

“In the real world, people encounter and comprehend information through a combination of different modalities such as audio, visual, and sensing,” said Brethenoux. “Multimodal GenAI is important because data is typically multimodal. When single modality models are combined or assembled to support multimodal GenAI applications, it often leads to latency and less accurate results, resulting in a lower quality experience.”